The top cloud computing trends to look out for in 2023. Cloud computing has taken the world by storm.

Cloud computing has become an essential tool for business and a helpful way to store and share data. The pandemic made remote work the norm, which compelled many firms to embrace cloud platforms. Cloud computing trends are visible in areas such as application and infrastructure software, business processes, and system infrastructure.

Cloud computing is the on-demand delivery of computing services, including servers, storage, databases, networking, software, analytics, and intelligence, over the internet to offer faster innovation, flexible resources, and economies of scale. It is used by corporations that want business continuity, cost reduction, and future scalability. The top cloud computing trends to look out for in 2023 include AI and ML, Kubernetes, multi and hybrid cloud solutions, IoT, cloud security, and more. As cloud computing continues to grow, here are the top 10 cloud computing trends to look out for in 2023.

Edge Computing

Edge computing is one of the biggest trends in cloud computing. Here, data is stored, processed, and analyzed at the edge of the network, geographically closer to its source. Combined with the increasing use of 5G, this enables faster processing and reduced latency. Its major benefits include more privacy, faster data transmission, security, and increased efficiency. Edge computing is expected to be at the center of many cloud strategies, making it a top cloud computing trend for 2023.

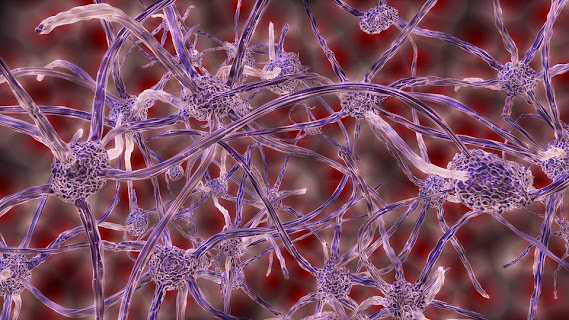
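To make the idea of processing data close to its source concrete, here is a minimal Python sketch. It assumes a single edge device that collects temperature readings and forwards only a small summary instead of every raw value. The function name `summarize_at_edge`, the sample readings, and the alert threshold are all hypothetical and chosen only for illustration.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce raw sensor readings to a small summary on the edge device."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the threshold are kept in full.
        "alerts": [r for r in readings if r > threshold],
    }


# Hypothetical temperature samples collected locally by one device.
raw_readings = [21.0, 21.5, 22.0, 35.5, 21.5]

# Only this summary would be sent on to the central cloud.
summary = summarize_at_edge(raw_readings, threshold=30.0)
print(summary)
```

Sending a summary rather than every reading is one way an edge setup can cut the amount of data transmitted and keep raw values local.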

AI and ML

Artificial intelligence and machine learning are two technologies that are closely related to cloud computing. AI and ML services are more cost-effective when delivered through the cloud, since data collection and algorithm training need large amounts of computational power and storage space. They are a solution for managing massive volumes of data to improve tech company productivity. The key trends that are likely to emerge in this area include increased automation and self-learning capabilities, greater data security and privacy, and more personalized cloud experiences.

Disaster Recovery

Cloud computing is effective in disaster recovery and offers businesses the ability to quickly restore critical systems in the event of a natural or man-made catastrophe. Disaster recovery here refers to the process of recovering from a disaster, such as a power outage, data loss, or hardware failure, using cloud-based resources.

Multi and Hybrid Cloud Solutions

Many enterprises have adopted a multi-cloud and hybrid IT strategy, which combines on-premises infrastructure, dedicated private clouds, several public clouds, and legacy platforms. These solutions offer a combination of public and private clouds dedicated to a specific company in sectors where data is a key business driver, such as insurance and banking. Hence, multi and hybrid cloud solutions will be among the top cloud computing trends in 2023 and the coming years.

Cloud Security and Resilience

Several security risks still exist when companies migrate to the cloud. In the upcoming years, investing in cybersecurity and building resilience against everything from data loss to the effects of a pandemic on international trade will become increasingly important and a big cloud trend. In 2023, this trend will expand the use of managed “security-as-a-service” providers, as well as AI and predictive technology that detect risks before they cause issues.

Cloud Gaming

Cloud gaming services are provided by Microsoft, Sony, Nvidia, and Amazon. Streaming video games requires high bandwidth, so it is practical only with high-speed internet access. With the introduction of 5G, cloud gaming is expected to become a significant industry in 2023.

Kubernetes

A key trend is the increased adoption of container orchestration platforms like Kubernetes, often used together with container tools such as Docker. This technology enables large-scale deployments that are highly scalable and efficient. Kubernetes is an extensible, open-source platform that runs containerized applications and manages their services and workloads centrally. It is rapidly evolving and will continue to be a major player in cloud computing trends over the next few years.
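Kubernetes manages workloads declaratively: an operator states a desired state, and the platform works to bring the running system in line with it. The toy Python sketch below illustrates that idea only. It does not use the Kubernetes API, and the function `reconcile` and the replica names are hypothetical.

```python
def reconcile(desired_replicas, running):
    """Compare the desired replica count with what is currently running.

    Returns how many replicas to start and which running ones to stop.
    """
    to_start = max(desired_replicas - len(running), 0)
    # Anything beyond the desired count is surplus and should be stopped.
    to_stop = running[desired_replicas:]
    return to_start, to_stop


# Hypothetical state: three replicas wanted, one currently running.
print(reconcile(3, ["web-a"]))

# Hypothetical state: one replica wanted, three currently running.
print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

A real orchestrator repeats this kind of comparison continuously, which is how it can recover automatically when a workload fails.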

Serverless Computing

Serverless computing came into the computing industry as a result of the emergence of the sharing economy. Here, compute resources are provided as a service, and the cloud provider runs and maintains the underlying servers. This means that an organization pays only for the resources it uses rather than having to maintain its own servers. In addition, serverless cloud solutions are becoming popular due to their ease of use and the ability to quickly build, deploy, and scale cloud solutions. Overall, serverless is an emerging technology that has been growing in popularity over the years.
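The pay-for-what-you-use idea can be illustrated with simple arithmetic. The Python sketch below compares a usage-based bill with the cost of a server that runs all month. Every price and workload figure in it is a made-up assumption for illustration, not a quote from any cloud provider, and the function names are hypothetical.

```python
def pay_per_use_cost(invocations, seconds_per_invocation, price_per_second):
    """Cost when billing covers only the time the code actually runs."""
    return invocations * seconds_per_invocation * price_per_second


def always_on_cost(hours, price_per_hour):
    """Cost of a server that is billed for every hour, busy or idle."""
    return hours * price_per_hour


# Hypothetical workload and prices (assumptions, not real provider rates).
serverless_bill = pay_per_use_cost(
    invocations=100_000,
    seconds_per_invocation=0.2,
    price_per_second=0.00002,
)
server_bill = always_on_cost(hours=720, price_per_hour=0.05)

print(f"pay-per-use: {serverless_bill:.2f}")
print(f"always-on:   {server_bill:.2f}")
```

With a light or bursty workload like this one, the usage-based bill is far smaller. For a workload that keeps a server busy around the clock, the comparison can tip the other way.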

Blockchain

A blockchain is a linked list of blocks containing records, and it keeps growing as users add to it. Cryptographic hashes link each block to the previous one, which makes stored records hard to alter unnoticed. It offers excellent security, transparency, and decentralization. It is now increasingly used in conjunction with the cloud. It can process vast amounts of data and exercise control over documents economically and securely. The technology holds tremendous promise for several industrial applications.
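The linked-list structure described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: there is no network, no consensus mechanism, and no proof of work, only blocks chained together by SHA-256 hashes. The helper names `block_hash`, `add_block`, and `is_valid` are hypothetical.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a new block that points at the hash of the last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Check every link and every stored hash in the chain."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(block["index"], block["data"], block["prev_hash"])
        if block["hash"] != recomputed:
            return False
    return True


chain = []
add_block(chain, "record A")
add_block(chain, "record B")
print(is_valid(chain))  # the untouched chain passes the check

chain[0]["data"] = "tampered"
print(is_valid(chain))  # the edited block no longer matches its stored hash
```

Because each block's hash covers the previous block's hash, changing an old record invalidates the check unless every later block is recomputed as well.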

IoT

IoT (the Internet of Things) is a well-known trend in cloud computing. It is a technology that maintains connections between computers, servers, and networks. It functions as a mediator that ensures successful communication and assists in data collection from remote devices. It also handles warnings and supports the security protocols that businesses use to create a safer cloud environment.

Website: https://computerapp.sfconferences.com/

Online nomination: https://x-i.me/conimr15q
